Text2AugmentedReality

This test, from concept to production model, took 40 minutes!

Cleanup was needed for the retopology, but I wrote a Houdini workflow for remeshing, auto UVs, autorigging, and skeleton retargeting all in one place.

I had seen AI auto-skinning and animation, but my tests needed too much keyframe cleanup, so I ditched it. This will improve though, and I can imagine it becoming the standard for 3D character generation in the future.

The newer AI models can generate PBR materials, so if I can get segmentation working in COPs, all the texture upscaling and cleanup/editing can be done in Houdini, without needing Substance Painter.

Seriously Impressive!

Gaussian Splats

I’ve been experimenting with processing and publishing a new type of 3D point cloud called Gaussian splatting for web viewing. Unlike conventional point clouds or even photogrammetry-derived assets, Gaussian splats can capture fine detail such as text and graphics, and even thin geometry (trees), in 3D space like nothing else! Reflections in glass and metals can also be emulated with surprising accuracy.

The real magic lies in the splatting pipeline’s ability to dynamically adjust density and detail, enabling both ultra-high-resolution close-ups and performant distant views without the overhead of traditional geometry. Inputs are sharp image sequences or video. No LIDAR needed!

What excites me most is how this technology bridges the gap between raw scanned data and real-time rendering. Neural radiance fields (NeRFs) hinted at this future, but Gaussian splats deliver practical, artist-friendly workflows: exportable to game engines, and editable via intuitive parameters like opacity and splat decay. Early adopters in VFX and virtual production are already leveraging splatting for near-photorealistic environments with orders-of-magnitude smaller file sizes than equivalent textured meshes.
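For a rough intuition of what the opacity parameter does at render time, here is a minimal sketch of front-to-back alpha blending for a single pixel, which is how splat renderers generally combine overlapping splats. The function name `composite_splats` and the `(depth, rgb, alpha)` tuple layout are assumptions made for illustration, not any particular viewer's API, and the alpha is assumed to already include the Gaussian falloff at that pixel.

```python
def composite_splats(splats):
    """Blend the splats covering one pixel, nearest first.

    Each splat is a hypothetical (depth, (r, g, b), alpha) tuple.
    Returns the blended colour and the leftover transmittance.
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet blocked by nearer splats
    for _, rgb, alpha in sorted(splats, key=lambda s: s[0]):
        weight = alpha * transmittance
        for i in range(3):
            color[i] += weight * rgb[i]
        transmittance *= 1.0 - alpha
    return tuple(color), transmittance


# A half-transparent red splat in front of an opaque blue one.
pixel, leftover = composite_splats([
    (2.0, (0.0, 0.0, 1.0), 1.0),
    (1.0, (1.0, 0.0, 0.0), 0.5),
])
```

Lowering a splat's opacity lets more of whatever sits behind it show through, which is why it works as an intuitive editing control.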

As GPU optimizations mature, I foresee Gaussian splatting becoming the standard for immersive 3D experiences and volumetric capture, democratizing high-fidelity scanning while freeing artists from the constraints of UV unwrapping and retopology. More to come!

note: these demos are 3D, not videos. Take them for a spin (orbit).

genAI - archViz

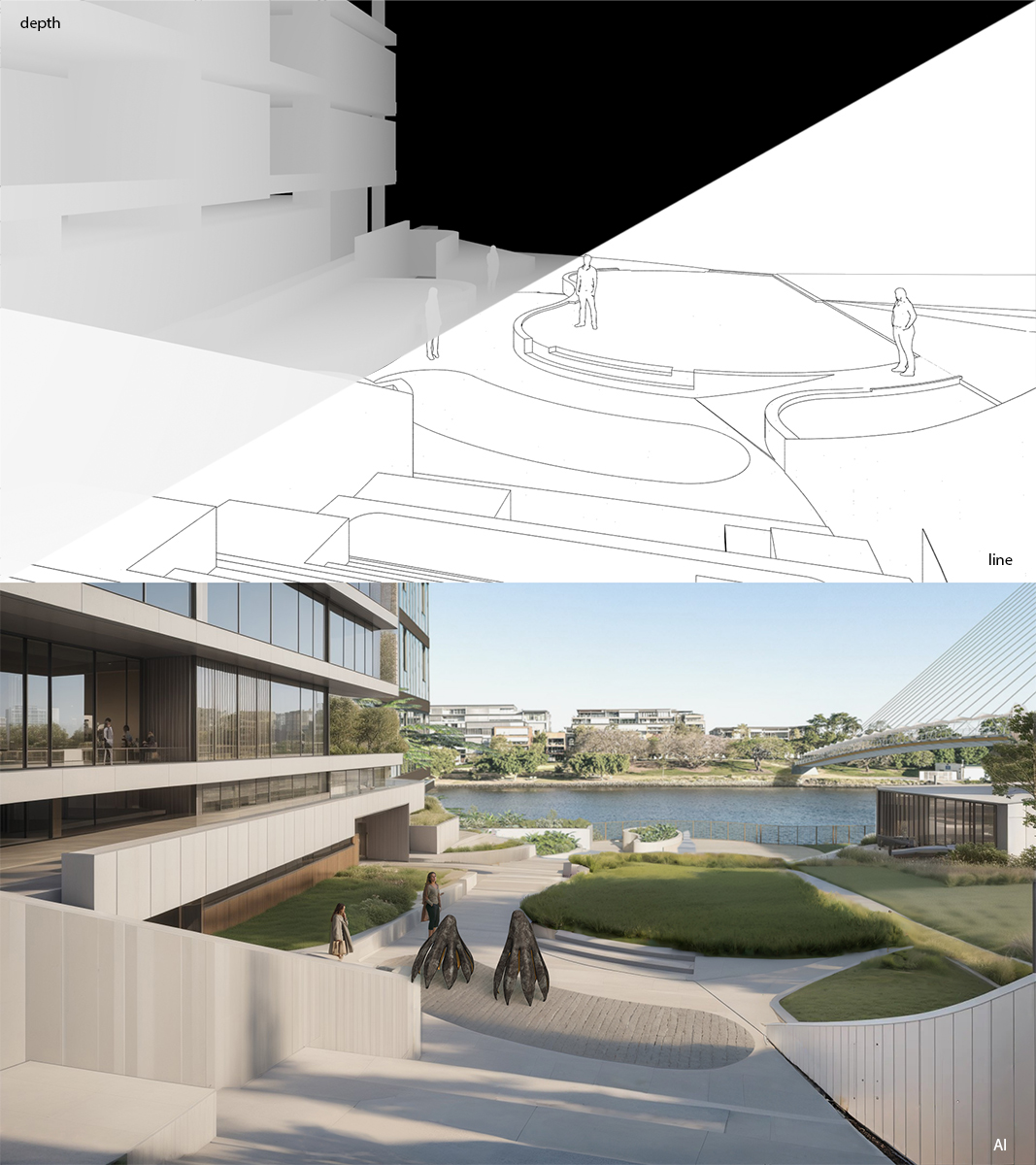

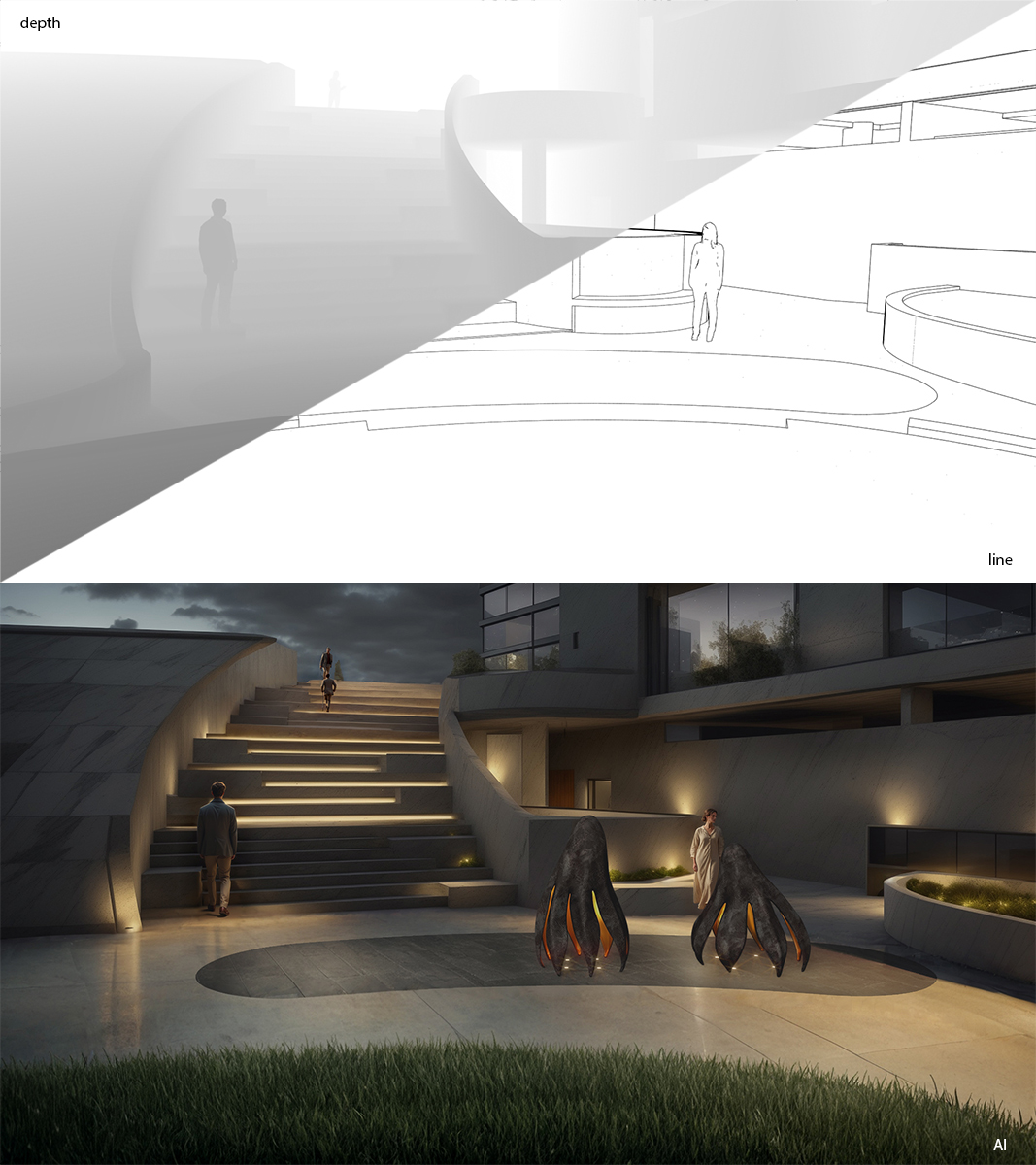

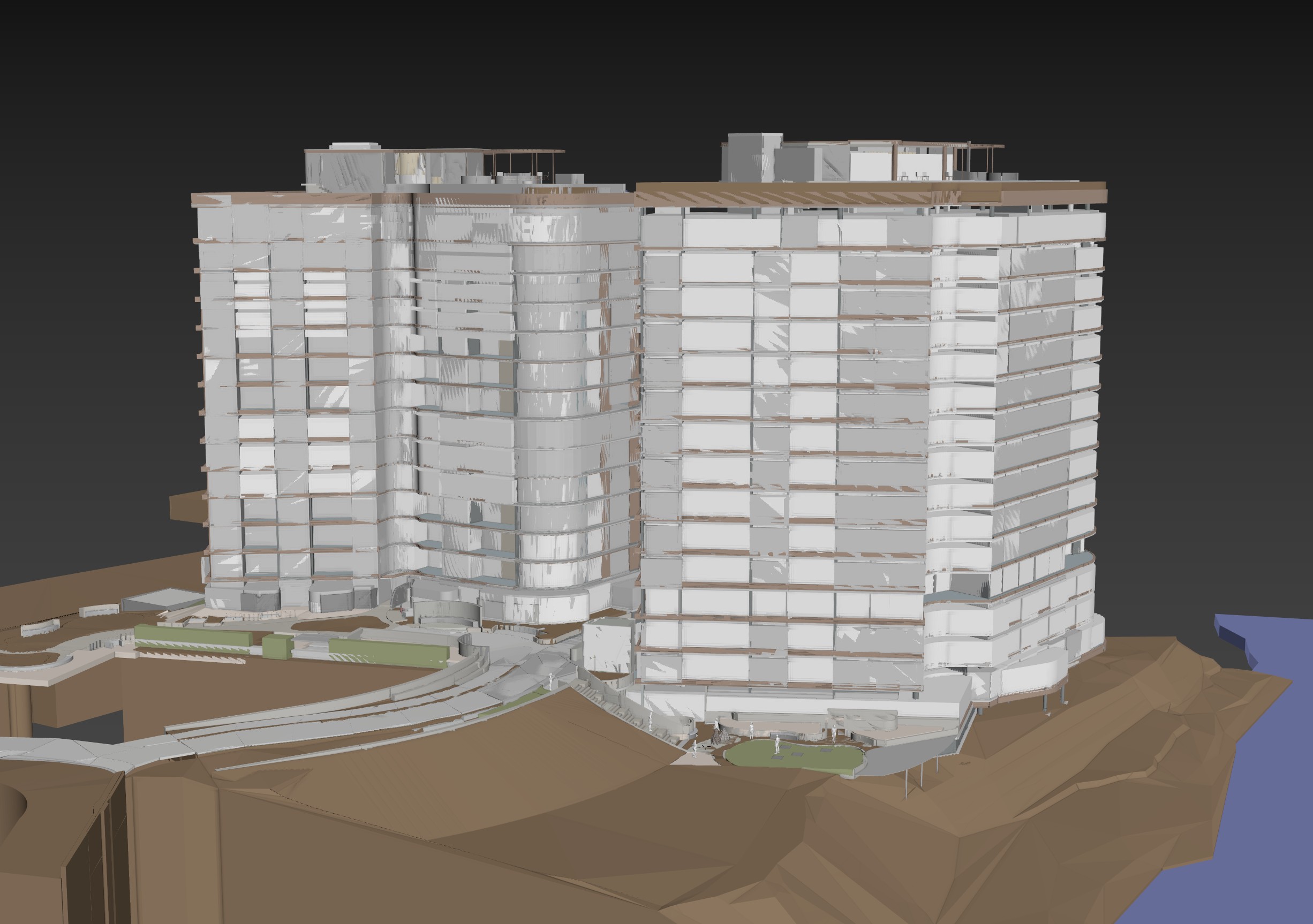

Using just the IFC CAD model, I was able to place some people and cameras into the 3D scene and quickly render out a depth and line pass, without needing to add lighting, materials, shaders or post-processing.

These passes were then fed into a ControlNet with text prompts to output the final renders. The images speak for themselves: while not perfect, they communicate the exterior site effectively enough without too much uncanny valley.
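As an illustration of the depth-pass step, here is a minimal sketch of turning raw renderer depth into an 8-bit control image. It assumes the depth ControlNet expects near surfaces to be bright (the usual convention for models trained on estimated depth maps); the function name `depth_to_control_image` and the nested-list input are hypothetical, chosen only to keep the example dependency-free.

```python
def depth_to_control_image(depth, near_is_bright=True):
    """Map a 2D list of raw depth values to 8-bit grey levels (0-255)."""
    flat = [d for row in depth for d in row]
    lo, hi = min(flat), max(flat)
    span = hi - lo
    out = []
    for row in depth:
        new_row = []
        for d in row:
            # A flat pass (span == 0) has no range to stretch: use t = 0
            # before the optional inversion below.
            t = (d - lo) / span if span else 0.0
            if near_is_bright:
                t = 1.0 - t  # nearest surface becomes white
            new_row.append(round(t * 255))
        out.append(new_row)
    return out


# Tiny 2x2 depth pass in scene units (hypothetical values).
control = depth_to_control_image([[1.0, 2.0], [5.0, 5.0]])
```

The resulting grey levels would then be saved as an image and passed to the ControlNet alongside the text prompt.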

Observations:

- It’s still difficult to control material finishes without a lot of masking and manual prompting. Next time I’ll render out an object mask to see if I can control this, and aim for more consistency with finishes.

- All rendering was done in a day, compared to roughly a week of work doing it manually, “the old-fashioned way”.

note: the artwork still needed to be rendered using a rendering/lighting system (FStorm), as its surface finish and position had to be precise.

AI mocap / Edutech AR

The processed motion data was then retargeted onto custom 3D models and integrated into a WebAR environment using Zappar Matterport, enabling users to visualize the animations in real time via their smartphones. The prototype was designed as an interactive fitness assistant, demonstrating proper gym equipment usage and posture correction without relying on traditional video tutorials, and offering a more dynamic, 3D spatial learning experience.

While the project remained in the prototyping phase, it showcased the potential of AI motion capture, procedural animation, and WebAR in creating accessible, immersive training tools. This approach could be expanded into enterprise training, remote coaching, or even physiotherapy applications, highlighting the intersection of XR, AI, and real-world utility.

genAI ArchViz

While there are still some caveats, the results are fantastic for the minimal time spent! These examples took between 5 and 15 minutes each, once I was happy with my prompts and checkpoint models. No inpainting.

In the future, rendering will largely be a process of combining 3D models, prompt refinement, and materiality, then simply pressing a button.

Have I wasted the last 15 years learning material shaders, lighting systems, and offline rendering?

More tests to come...